Vox clamantis in deserto ("A voice crying in the wilderness")

Michi Trota: The public pays a pile for Big Tech’s data centers

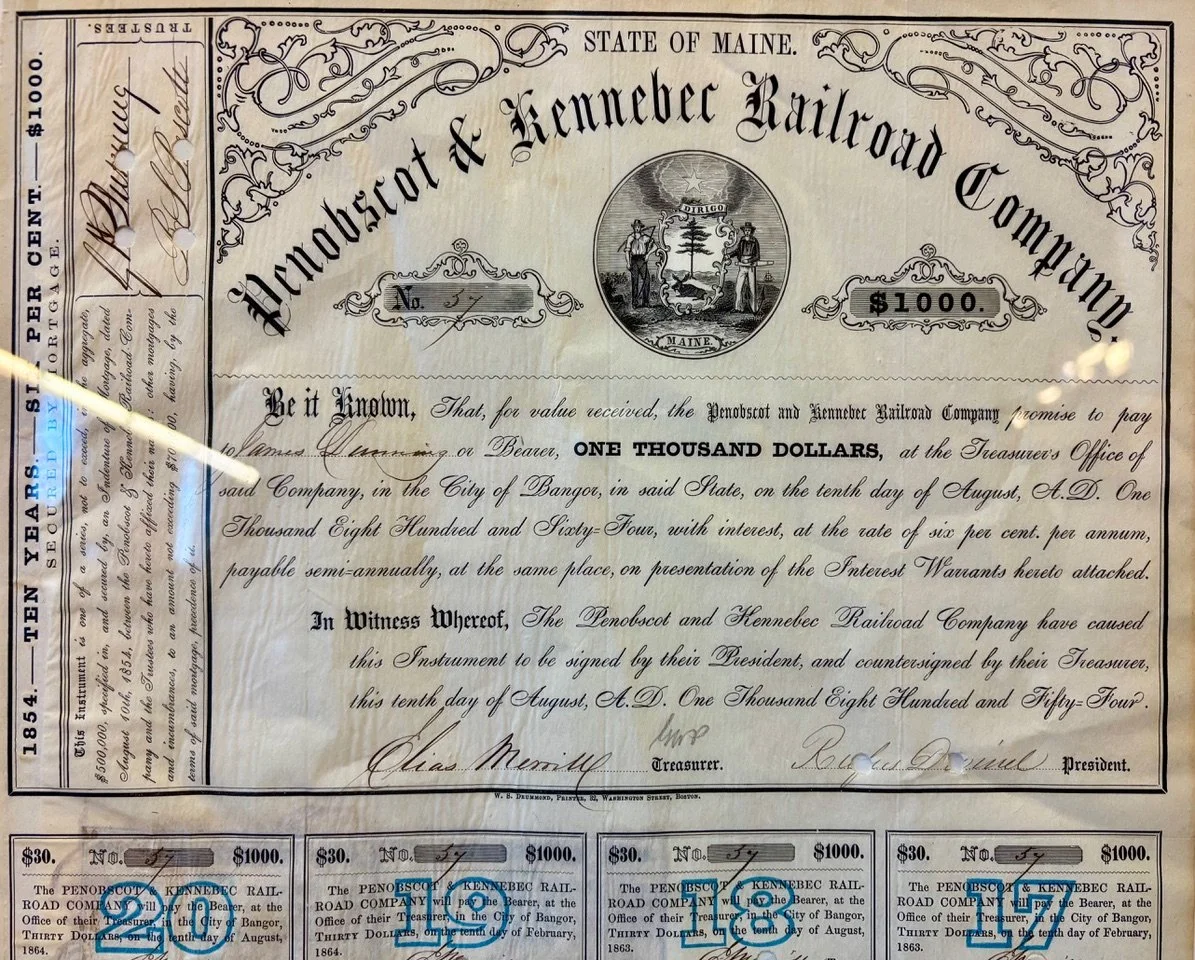

Servistar’s proposed huge data center in Westfield, Mass. It would require massive amounts of electricity.

The Massachusetts Green High Performance Computing Center, in Holyoke, a collaboration among several universities and corporate sponsors, including the University of Massachusetts, MIT, Harvard, Boston University, and Northeastern, as well as Dell EMC and Cisco.

Via OtherWords.org

Bill Gates recently made headlines by suggesting that climate change is no longer a priority, but the American public begs to differ.

In this last election, climate change was a defining issue in such states as Virginia and Georgia, where voters grappled with rising energy costs. And no matter how much tech billionaires try to distract us, increasing power costs and our worsening climate are directly connected to such corporations as Google, Meta, Microsoft, and Amazon racing to dominate the AI landscape.

According to the U.S. Energy Information Administration, the price of energy has risen at more than twice the rate of inflation since 2020, and Big Tech’s push for more power-hungry data centers is only making it worse.

The data centers proliferating across the country drive up energy costs by powering energy-ravenous generative AI, cloud storage, digital networks, and other energy-intensive programs, and much of that power comes from coal and natural gas, which exacerbate climate change.

Some data centers consume as much electricity as a small city. In areas near significant data center activity, the wholesale price of electricity is up as much as a whopping 267 percent from five years ago, and everyday customers are eating those costs.

Americans are also shouldering increasing costs of an extreme climate.

The Joint Center for Housing Studies at Harvard noted that insurance prices rose 74 percent between 2008 and 2024 — and between 2018 and 2023, nearly 2 million people had their policies canceled by insurers because of climate risks.

Meanwhile, home prices have gone up 40 percent in the past two decades — meaning that the cost of home repair and recovery from climate disasters has also grown, all while wages remain stagnant.

Data centers aren’t just putting our wallets at risk. Power grids across the country are already strained from aging infrastructure and repeated battering during extreme weather events.

The additional pressure to feed energy-intensive data centers only heightens the risk of power blackouts in such emergencies as wildfires, deep freezes, and hurricanes. And in some communities, people’s taps have literally run dry because data centers used all the local groundwater.

Worse still, Big Tech’s AI energy demand has triggered a resurgence in dirty energy with the construction of new gas-powered energy plants and delayed shutdowns of fossil fuel-powered plants. The tech industry is even pushing for a revitalization of nuclear energy, including the planned 2028 reopening of Three Mile Island — site of the worst nuclear power plant disaster in U.S. history — to help power Microsoft’s data centers.

Everyday people bear the costs of Big Tech’s hunger for profits. We pay them in rising energy bills, our worsening climate, our lack of access to safe water, increased noise pollution, and risks to our health and safety.

It doesn’t have to be this way. Instead of raising our bills, draining our local resources, and destabilizing our climate, Big Tech could create more energy jobs, lessen our power bills, and sustain communities.

We can demand that tech giants such as Microsoft, Meta, Google, and Amazon uphold their commitments to use 100 percent renewable energy and not rely on fossil fuels and nuclear energy to power data centers.

We can insist that data centers go only where they’re wanted, by ensuring that communities get full transparency about how a data center would affect their power supply, water access, and noise levels, along with protection from those impacts.

The current administration is ignoring its obligations to the American public by refusing to rein in Big Tech. But tech billionaires still have a responsibility to the very public they depend on for their existence.

Michi Trota is the executive editor of Green America.

Ayse Coskun: AI strains data centers

Google Data Center, The Dalles, Ore.

— Photo by Visitor7

BOSTON

The artificial-intelligence boom has had such a profound effect on big tech companies that their energy consumption, and with it their carbon emissions, have surged.

The spectacular success of large language models such as ChatGPT has helped fuel this growth in energy demand. At 2.9 watt-hours per ChatGPT request, AI queries require about 10 times the electricity of traditional Google queries, according to the Electric Power Research Institute, a nonprofit research firm. Emerging AI capabilities such as audio and video generation are likely to add to this energy demand.
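To make the comparison concrete, here is a small Python sketch of the arithmetic. The 2.9 Wh figure comes from the paragraph above; the roughly 0.3 Wh per conventional search is an assumption (a widely quoted estimate for a traditional web search), and the 100 million daily queries is a purely hypothetical volume chosen for illustration.

```python
# Per-request energy in watt-hours. CHATGPT_WH is the EPRI figure quoted above;
# GOOGLE_SEARCH_WH is an assumed, widely quoted estimate for one web search.
CHATGPT_WH = 2.9
GOOGLE_SEARCH_WH = 0.3


def energy_ratio(ai_wh: float, search_wh: float) -> float:
    """How many times more electricity one AI request uses than one search."""
    return ai_wh / search_wh


def daily_energy_mwh(wh_per_query: float, queries_per_day: float) -> float:
    """Total daily electricity in megawatt-hours (1 MWh = 1,000,000 Wh)."""
    return wh_per_query * queries_per_day / 1_000_000


if __name__ == "__main__":
    print(f"ratio: {energy_ratio(CHATGPT_WH, GOOGLE_SEARCH_WH):.1f}x")
    # Hypothetical volume of 100 million queries per day, for illustration only.
    queries = 100_000_000
    print(f"AI:     {daily_energy_mwh(CHATGPT_WH, queries):,.0f} MWh/day")
    print(f"search: {daily_energy_mwh(GOOGLE_SEARCH_WH, queries):,.0f} MWh/day")
```

Run as a script, it prints a ratio of about 9.7x, roughly the "10 times" cited, and about 290 MWh per day for the AI requests versus about 30 MWh for the same number of searches.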

The energy needs of AI are shifting the calculus of energy companies. They’re now exploring previously untenable options, such as restarting a nuclear reactor at the Three Mile Island power plant, site of the infamous 1979 disaster. That reactor has been dormant since 2019.

Data centers have had continuous growth for decades, but the magnitude of growth in the still-young era of large language models has been exceptional. AI requires a lot more computational and data storage resources than the pre-AI rate of data center growth could provide.

AI and the grid

Thanks to AI, the electrical grid – in many places already near its capacity or prone to stability challenges – is experiencing more pressure than before. There is also a substantial lag between computing growth and grid growth. Data centers take one to two years to build, while adding new power to the grid requires over four years.

As a recent report from the Electric Power Research Institute lays out, just 15 states contain 80% of the data centers in the U.S. Some states – such as Virginia, home to Data Center Alley – astonishingly see over 25% of their electricity consumed by data centers. There are similar trends of clustered data center growth in other parts of the world. For example, Ireland has become a data center nation.

AI is having a big impact on the electrical grid and, potentially, the climate.

Along with the need to add more power generation to sustain this growth, nearly all countries have decarbonization goals. This means they are striving to integrate more renewable energy sources into the grid. Renewables such as wind and solar are intermittent: The wind doesn’t always blow and the sun doesn’t always shine. The dearth of cheap, green and scalable energy storage means the grid faces an even bigger problem matching supply with demand.

Additional challenges to data center growth include the increasing use of water cooling for efficiency, which strains limited freshwater sources. As a result, some communities are pushing back against new data center investments.

Better tech

There are several ways the industry is addressing this energy crisis. First, computing hardware has gotten substantially more energy efficient over the years in terms of the operations executed per watt consumed. Data centers’ power usage effectiveness (PUE), the ratio of a facility’s total power consumption to the power consumed by its computing equipment alone, has been reduced to 1.5 on average, and even to an impressive 1.2 in advanced facilities. (A PUE of 1.0 would mean that no power at all goes to cooling or other infrastructure.) New data centers cool more efficiently by using water cooling and, when it’s available, cool outside air.
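Power usage effectiveness is conventionally defined as a facility’s total power draw divided by the power drawn by its IT equipment (servers, storage, networking), so 1.0 is the theoretical floor. The sketch below, assuming a hypothetical 10 MW IT load, shows what the averages quoted above mean in practice; the function names are illustrative, not taken from any particular tool.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power.

    1.0 would mean every watt goes to computing; anything above 1.0 is
    overhead for cooling, power conversion, lighting, and so on.
    """
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_kw(it_equipment_kw: float, pue_value: float) -> float:
    """Non-computing power (cooling etc.) implied by a given PUE."""
    return it_equipment_kw * (pue_value - 1.0)


if __name__ == "__main__":
    it_load = 10_000.0  # hypothetical 10 MW of servers, storage and networking
    for p in (1.5, 1.2):
        extra = overhead_kw(it_load, p)
        print(f"PUE {p}: {extra:,.0f} kW overhead, {it_load + extra:,.0f} kW total")
```

For the same 10 MW of computing, going from a PUE of 1.5 to 1.2 cuts overhead from 5,000 kW to 2,000 kW, so the facility’s total draw falls by 20 percent (from 15,000 kW to 12,000 kW).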

Unfortunately, efficiency alone is not going to solve the sustainability problem. In fact, Jevons paradox suggests that efficiency gains may increase total energy consumption in the longer run: as computation gets cheaper, demand for it can grow faster than efficiency improves. In addition, hardware efficiency gains have slowed down substantially, as the industry has hit the limits of chip technology scaling.
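One way to see how Jevons paradox can arise is a toy model in which demand for computation responds to its cost with a constant price elasticity. This is a textbook-style illustration under stated assumptions (cost proportional to energy per operation, constant elasticity, hypothetical parameter values), not a forecast of real data center demand.

```python
def energy_use(efficiency: float, elasticity: float,
               base_demand: float = 100.0, energy_price: float = 1.0) -> float:
    """Total energy used for computing under a toy constant-elasticity model.

    Assumptions (hypothetical, for illustration only):
    - the cost of one unit of computation is energy_price / efficiency;
    - demand for computation is base_demand * cost ** (-elasticity);
    - energy used is demand / efficiency.
    """
    cost = energy_price / efficiency
    demand = base_demand * cost ** (-elasticity)
    return demand / efficiency


if __name__ == "__main__":
    for eps in (0.5, 1.5):
        before = energy_use(efficiency=1.0, elasticity=eps)
        after = energy_use(efficiency=2.0, elasticity=eps)  # hardware twice as efficient
        print(f"elasticity {eps}: energy {before:.1f} -> {after:.1f}")
```

With an elasticity of 0.5, doubling efficiency cuts energy use from 100 to about 70.7 units; with an elasticity of 1.5, the same improvement raises it to about 141.4. In this model energy use scales as efficiency raised to the power (elasticity minus 1), so total consumption grows with efficiency whenever demand is elastic.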

To continue improving efficiency, researchers are designing specialized hardware such as accelerators, new integration technologies such as 3D chips, and new chip cooling techniques.

Similarly, researchers are increasingly studying and developing data center cooling technologies. The Electric Power Research Institute report endorses new cooling methods, such as air-assisted liquid cooling and immersion cooling. While liquid cooling has already made its way into data centers, only a few new data centers have implemented the still-in-development immersion cooling.

Running computer servers in a liquid – rather than in air – could be a more efficient way to cool them.

Photo by Craig Fritz, Sandia National Laboratories

Flexible future

A new way of building AI data centers is flexible computing, where the key idea is to compute more when electricity is cheaper, more available and greener, and less when it’s more expensive, scarce and polluting.

Data center operators can convert their facilities into flexible loads on the grid. Academia and industry have provided early examples of data center demand response, in which data centers adjust their power use to the grid’s needs. For example, they can schedule certain computing tasks for off-peak hours.
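Scheduling deferrable work for cheaper hours can be sketched as a simple greedy placement. This assumes hypothetical hourly prices (they could equally be carbon-intensity figures), jobs that can be paused and resumed hour by hour, and a single power limit for the whole facility. The `Job` class and `schedule` function are illustrative names, and a greedy approach is not guaranteed to find the cheapest possible schedule.

```python
from dataclasses import dataclass


@dataclass
class Job:
    """A deferrable computing job (hypothetical example type)."""
    name: str
    hours: int        # hours of compute the job needs
    power_kw: float   # average power draw while running
    deadline: int     # job must finish before this hour index


def schedule(jobs: list[Job], price: list[float],
             capacity_kw: float) -> dict[str, list[int]]:
    """Greedily place each job's hours in the cheapest hours before its deadline.

    Jobs with earlier deadlines are placed first. An hour is usable only if
    adding the job's draw keeps the facility at or below capacity_kw.
    """
    used = [0.0] * len(price)  # power already committed in each hour
    plan: dict[str, list[int]] = {}
    for job in sorted(jobs, key=lambda j: j.deadline):
        window = range(min(job.deadline, len(price)))
        by_price = sorted(window, key=lambda h: price[h])
        chosen = [h for h in by_price
                  if used[h] + job.power_kw <= capacity_kw][:job.hours]
        if len(chosen) < job.hours:
            raise RuntimeError(f"cannot fit {job.name} before hour {job.deadline}")
        for h in chosen:
            used[h] += job.power_kw
        plan[job.name] = sorted(chosen)
    return plan


if __name__ == "__main__":
    hourly_price = [50, 40, 20, 15, 30, 60]  # hypothetical $/MWh for hours 0-5
    jobs = [Job("train", hours=2, power_kw=500, deadline=6),
            Job("backup", hours=1, power_kw=300, deadline=3)]
    print(schedule(jobs, hourly_price, capacity_kw=600))
```

Here the short backup job lands in hour 2, the cheapest hour before its deadline, and the training job takes hours 3 and 4, skipping hour 2 because running both jobs there would exceed the 600 kW limit.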

Implementing broader and larger scale flexibility in power consumption requires innovation in hardware, software and grid-data center coordination. Especially for AI, there is much room to develop new strategies to tune data centers’ computational loads and therefore energy consumption. For example, data centers can scale back accuracy to reduce workloads when training AI models.
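Demand response of this kind could look like the following proportional power-capping policy: when the grid asks the facility to drop to a target level, flexible workloads (such as AI training) are throttled in proportion to their draw, while latency-sensitive services are left alone. The workload names, numbers and the proportional rule are assumptions for illustration; real facilities enforce caps through their own hardware and software controls.

```python
def apply_power_cap(loads_kw: dict[str, float], flexible: set[str],
                    target_kw: float) -> dict[str, float]:
    """Throttle flexible workloads proportionally so total draw meets target_kw.

    Inflexible workloads keep their current draw. Raises ValueError when the
    inflexible load alone already exceeds the target.
    """
    total = sum(loads_kw.values())
    if total <= target_kw:
        return dict(loads_kw)  # already at or below the requested level
    fixed = sum(kw for name, kw in loads_kw.items() if name not in flexible)
    if fixed > target_kw:
        raise ValueError("target is below the inflexible load")
    flex = total - fixed  # positive here, since total > target_kw >= fixed
    scale = (target_kw - fixed) / flex
    return {name: kw * scale if name in flexible else kw
            for name, kw in loads_kw.items()}


if __name__ == "__main__":
    loads = {"web": 400.0, "training": 800.0, "batch": 200.0}  # hypothetical kW
    capped = apply_power_cap(loads, flexible={"training", "batch"}, target_kw=1000.0)
    for name, kw in capped.items():
        print(f"{name}: {kw:.0f} kW")
```

With a 1,000 kW target, the 400 kW web tier is untouched and the flexible jobs are scaled to 60 percent, so training drops from 800 kW to 480 kW and batch work from 200 kW to 120 kW.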

Realizing this vision requires better modeling and forecasting. Data centers can try to better understand and predict their loads and conditions. It’s also important to predict the grid load and growth.

The Electric Power Research Institute’s load forecasting initiative involves activities to help with grid planning and operations. Comprehensive monitoring and intelligent analytics – possibly relying on AI – for both data centers and the grid are essential for accurate forecasting.
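Load forecasting can start from very simple baselines. The sketch below predicts the next day’s hourly load as the average of the same hour over the past week; it is a hypothetical example of a seasonal-average baseline, not the method used in EPRI’s initiative, which the article does not describe.

```python
def seasonal_average_forecast(history_kw: list[float], period: int = 24,
                              lookback: int = 7) -> list[float]:
    """Forecast the next `period` readings as the mean of the same slot
    (e.g. the same hour of day) over the last `lookback` full periods."""
    n = min(lookback, len(history_kw) // period)
    if n == 0:
        raise ValueError("need at least one full period of history")
    recent = history_kw[-n * period:]  # the most recent n full periods
    return [sum(recent[d * period + slot] for d in range(n)) / n
            for slot in range(period)]


if __name__ == "__main__":
    # Hypothetical readings with a 4-slot "day" to keep the example short.
    history = [10, 20, 30, 40, 12, 22, 32, 42]
    print(seasonal_average_forecast(history, period=4))  # [11.0, 21.0, 31.0, 41.0]
```

Practical forecasts would typically layer weather, calendar effects and planned workloads on top of a baseline like this, and compare against it to judge whether a more complex model actually helps.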

On the edge

The U.S. is at a critical juncture with the explosive growth of AI. It is immensely difficult to integrate hundreds of megawatts of electricity demand into already strained grids. It might be time to rethink how the industry builds data centers.

One possibility is to sustainably build more edge data centers – smaller, widely distributed facilities – to bring computing to local communities. Edge data centers can also reliably add computing power to dense, urban regions without further stressing the grid. While these smaller centers currently make up 10% of data centers in the U.S., analysts project the market for smaller-scale edge data centers to grow by over 20% in the next five years.

Along with converting data centers into flexible and controllable loads, innovating in the edge data center space may make AI’s energy demands much more sustainable.

Ayse Coskun is a professor of electrical and computer engineering at Boston University.

Disclosure statement

Ayse K. Coskun has recently received research funding from the National Science Foundation, the Department of Energy, IBM Research, Boston University Red Hat Collaboratory, and the Research Council of Norway. None of the recent funding is directly linked to this article.